Intro to GitOps

At the core GitOps can be seen an operating model for developing and delivering Kubernetes-based infrastructure and applications. Here the core principle is that Git is the one and only source of truth for the current deplyoment state, Infrastructure and Applications. That means the Git status describe the desired state of the whole system in a declarative manner for each environment being a system of record. In other words Git is a combination of IaC and CI/CD and works best in a teams with a DevOps culture.

But there is of course some depth to this topic, and one need to have made certain experiences to understand the benefits of the GitOps conception and like with most things, you need to make you own experiences. To fasten your experience and get faster to the right questions and answers I would like to share the steeps of the evolution towards GitOps that I recently observed.

The following is a experiences compilation from several teams, several clusters.

Level 0: The dark yaml genesis

Meanwhile relatively long time ago, as soon as Kubernetes was installed but not really explored and understood, the team had to experiment with Kubernetes resources and API. People of very different experience wanted to make first deployments and explore things like how to get the logs and how to call a service from the outside world.

In this era the Kubernetes resources where places into .yaml files and stored chaotically.

Level 1: GitOps like repository

The chaos was not an option, so the team developed two approaches. One was a central repository that defines the environment state and another one where k8s resources where checked in alongside with the code of the service they controlled.

Interesting, but maybe not a very surprizing, observation was that Operation-minded people recognized the need of one Git repository that defines the state of the environment from beginning. Whereas most Dev-Minded heads where concerned a lot about “another one repository only for yamls?” ¯_(ツ)_/¯. Yes, DevOps mindset was not on the level in the observed set-up, as you might expect ;)

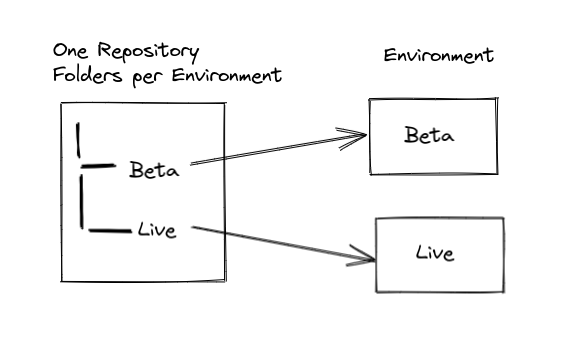

However this era ended up with only one GitOps repository and Sub folders per environment.

Master branch was chosen to describe the state of the environments.

Level 2: GitOps like deployment with a script

Having a GitOps like repository is kind of achievement, but still all actions and deployments where manually. So very fast a custom script was invented. Find below a historical variant of it:

#!/bin/bash

#set -x

if [[ $1 == "beta" ]]; then # checks if first argument is beta string

CLUSTER_NAME="BetaCluster"

CLUSTER_DIR="beta-cluster"

elif [[ $1 == "prod" ]]; then # checks if first argument is prod string

CLUSTER_NAME="LIVE_Cluster"

CLUSTER_DIR="live-cluster"

else # if no (or no valid) argument is given an error will be throws and the programm will be aborted

echo "Please provide cluster 'dev', 'beta' or 'prod'";

exit 1; # exit due to wrong argument

fi

CLUSTER_DIRS="-f ./$CLUSTER_DIR/ -R"

echo "You are about to deploy to: $CLUSTER_NAME "

echo "Your kubectl context is:"

kubectl config current-context # displays the actual cluster name

# changes in the right cluster to avoid deploying to the wrong cluster

read -p "Confirm switch your current cluster context to $CLUSTER_NAME [y] ?" clusterConfirm

case $clusterConfirm in

[yY]* ) kubectl config use-context $CLUSTER_NAME;;

* ) echo "ERROR: Context has to be correct in order to deploy to it"

exit 4;; # exit because current cluster might not be set correctly

esac

echo "Showing the DIFF:"

kubectl diff $CLUSTER_DIRS # checks the diff between chosen files and the kubernetes cluster

read -p "Confirm to apply the change! [y]" input # command prompt for confirmation

case $input in

[yY]* ) kubectl apply $CLUSTER_DIRS;; # checks if input is y

* ) echo "user abort"; exit 2;; # else the action will be aborted due to no non-confirmation

esac

exit 0 # program exit

usage is simple:

./deploy.sh dev #reconcile dev environment

This simple automation step, was however effective. First it worked from everyone’s laptop with the same configuration of Kubernetes cluster and access to it. Environmental assumptions where made of course. Same naming like BetaCluster, folder structure, access to clusters by everyone.

I’ll summarize what the script actually does:

- Warns you about context switch

- Do context switch

- Do

kubectl diffshowing the diff of the YAMLs in environment folder and the deployed state - Apply the new desired state (after confirmation)

Whereas this workflow is very simple, it already very enabling. I’ve observed that, people where forced to use kubectl diff and understood the idea of central repository. It provides also very fast feedback, so people could debug in dig into the things from their laptops - how they used to do anyway.

So learning where quicker onboardings of new team mates where faster and some level of Environment stability was guaranteed. So we used it do provison production environment as well.

However also this setup has it’s obvious limitations. Just to name some of them:

- The commit to repository is not coupled to deployment, still manual step, that will be forgotten.

- Not a full reconciliation. Resources are not deleted in cluster if deleted in repository (not always bad)

- No automatic reconciliation. Manual triggering.

- Not (good) support for helm

- A need to provide access to all managed clusters by all involved people.

However it was as step forward and the team learned a lot. However it could not be a final step, so we moved forward towards real GitOps.

Level 2.1: Half-automated

Just for the sake of completeness I would like to mention that. I’ve seen some individual attempts to build up on level 2 by adding more rules or by utilizing the script in a CI/CD manner. But non of them lead to a really new level or improved the basic idea without introduction of new problems, so we moved forward and as soon, heads and heads got a free minute we have done the final step.

Level 3: GitOps with Flux

In my opinion there a two main GitOps tools out there you just can’t ignore.

However after a very short evaluation, we found Flux v2 (GitOps Toolkit) to be minimal and flexible enough to feed our current needs completely. So let’s spend deep dive here in some details.

Getting started

The first nice thing about Flux is that you can bootstrap it with the CLI. Sp the migration from the level 2 to Flus was very smooth:

- Install Flux (it created even reposiotory)

- Copy your existing YAMLs to it.

- Add some advanced Flux configuration

Here is example how we install Flux with repository being on the GitLab Cloud:

# How to get GitLab token: https://docs.gitlab.com/ee/user/profile/personal_access_tokens.html

export GITLAB_TOKEN=<your-token>

flux bootstrap gitlab --owner=mycompany/mygroup --repository=flux --branch=master --path=eks_DevCluster --components-extra=image-reflector-controller,image-automation-controller --token-auth

# Output..

► connecting to gitlab.com

✔ repository created

✔ repository cloned

✚ generating manifests

✔ components manifests pushed

► installing components in flux-system namespace

namespace/flux-system created

customresourcedefinition.apiextensions.k8s.io/alerts.notification.toolkit.fluxcd.io created

customresourcedefinition.apiextensions.k8s.io/buckets.source.toolkit.fluxcd.io created

customresourcedefinition.apiextensions.k8s.io/gitrepositories.source.toolkit.fluxcd.io created

customresourcedefinition.apiextensions.k8s.io/helmcharts.source.toolkit.fluxcd.io created

customresourcedefinition.apiextensions.k8s.io/helmreleases.helm.toolkit.fluxcd.io created

customresourcedefinition.apiextensions.k8s.io/helmrepositories.source.toolkit.fluxcd.io created

customresourcedefinition.apiextensions.k8s.io/kustomizations.kustomize.toolkit.fluxcd.io created

customresourcedefinition.apiextensions.k8s.io/providers.notification.toolkit.fluxcd.io created

customresourcedefinition.apiextensions.k8s.io/receivers.notification.toolkit.fluxcd.io created

role.rbac.authorization.k8s.io/crd-controller-flux-system created

...

✔ install completed

► generating sync manifests

✔ sync manifests pushed

► applying sync manifests

◎ waiting for cluster sync

✔ bootstrap finished

So when ready, it creates the new Git repository (The GitOps repository), installs flux controller to the desired cluster and provisions basic Flux config into the flux GitOps repository. So after this one command you’re have working GitOps Flux Operator withing GitOps repository you can start working with, i found it very convenient.

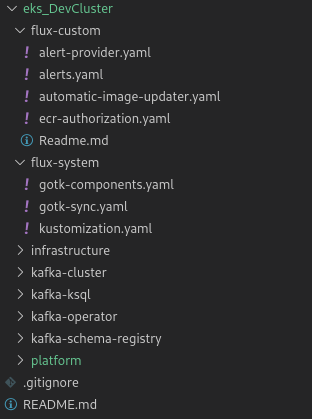

The cluster resources are going into the eks_DevCluster folder in the current example. Flux itself was installed into eks_DevCluster/flux-system and has created a namespace flux-system in the cluster where Flux run-time components are deployed then.

Finally you can copy your existing resources (see level 2) in to the cluster folder and the migration is happening even without restart of the services. Find example folder structure in relative simple scenario

here flux-custom is used by the team to define further Flux resources like Slack alerts

Flux Notifications configuration

Notifications is probably the first thing you would like to configure. Flux bring two CRDs for it Provider and Alert

# Flux confg

apiVersion: notification.toolkit.fluxcd.io/v1beta1

kind: Provider

metadata:

name: slack

namespace: flux-system

spec:

# Post alerts to Slack Channel #flux-dev

type: slack

address: 'https://hooks.slack.com/services/11111/2222/22234xxxyy'

channel: 'flux-dev'

Not alert configurations can be defined referencing the flux.

# Autor: aho

apiVersion: notification.toolkit.fluxcd.io/v1beta1

kind: Alert

metadata:

name: tracing-alert

namespace: flux-system

spec:

providerRef:

name: slack # Reference to Privder

eventSeverity: info #Severity

eventSources: # Resource Filters

- kind: GitRepository

name: '*'

- kind: Kustomization

name: '*'

By the way this is pretty verbose configuration.

Automatic image update feature

This feature is still alpha

However this is absolutely nice feature.Once got image Auto-update configuration working, you can annotate your YAMLs a bit and Flux will automatically replace your config with the name of the Docker image it founds in the Docker registry according to your rules.

Here is small example

apiVersion: apps/v1

kind: Deployment

metadata:

name: example-service

labels:

app: example-service

namespace: "dev"

spec:

replicas: 1

selector:

matchLabels:

app: example-service

template:

metadata:

labels:

app: example-service

spec:

containers:

- name: example-service

image: 11111111.dkr.ecr.amazonaws.com/example-service:20210101-09-32-29 # {"$imagepolicy":"flux-system:example-service-service"}

ports:

- containerPort: 80

alongside with the ìmagepolicy config

---

#Autor: aho

apiVersion: image.toolkit.fluxcd.io/v1alpha2

kind: ImageRepository

metadata:

name: example-service

namespace: flux-system

spec:

image: 11111111.dkr.ecr.amazonaws.com/example-service

interval: 1m10s

spec:

secretRef:

name: aws-registry-secret # see ecr_authorization.yaml#cron

---

apiVersion: image.toolkit.fluxcd.io/v1alpha2

kind: ImagePolicy

metadata:

name: example-service

namespace: flux-system

spec:

filterTags:

pattern: '\d{8}-\d{2}-\d{2}-\d{2}'

imageRepositoryRef:

name: example-service

policy:

alphabetical:

order: asc

What it all does is that Deployment resource will be updated in version as soon as new docker image is pushed to the 11111111.dkr.ecr.amazonaws.com/example-service tagged according to pattern \d{8}-\d{2}-\d{2}-\d{2}, meaning the newest date tag will be taken.

This new resource will be committed to the GitOps repo by Flux and subsequential the status of GitOps repository will be reconciled to the cluster.

How cool is that? All history is preserved and CD is possible if needed in every flexibility.

P.S. Given examples where working with Flux 13.x. However it’s only excerpt consult (flux docs) or let me know in the comments that the complete example would be interesting here.

Flux community and support

I’ve personally found a very vital Flux community on a CNFC Slack Flux Channel that is really able to help with a concrete issues you might have.

Summary GitOps with Flux

Let sum up on what we have on level 3 or full GitOps level with Flux v2:

- One real GitOps repository as system of record and as source of truth for the desired cluster environments

- Automatic reconciliation also for deleted resources.

- Configurable notifications to slack (and other tools)

- Deployment by commit

- Flexibility in deployment pipelines (see image updates)

Feel free to share your opinions in comments below. I would like to learn more about your GitOps journeys too.